Mathematics Behind the Linear Regression Algorithm

- Soham Shinde

- Sep 20, 2021

Hello Readers,

This is not a full blog post; instead, I am uploading a picture of notes that I wrote in my notebook. I learned the concept of linear regression and wrote it down along with a graph. I would like to share these notes, and we will discuss them in detail.

I have discussed what linear regression is, what a cost function is, and how to minimize the distance from the line for each training data point. This raises a question: what is a training data point? In supervised machine learning we work with two sets of data: the training set and the testing set. The training set consists of the data points the algorithm learns from in order to predict values. The algorithm learns from the training set and is then evaluated on the testing set.
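To make the training/testing idea concrete, here is a minimal sketch in plain Python. The data values, the helper name `train_test_split`, and the 25% test fraction are all assumptions made up for illustration; they are not taken from my notes.

```python
import random

# Hypothetical (distance in miles, travel time in hours) pairs, invented for illustration.
data = [(10, 6), (20, 11), (30, 20), (40, 22), (50, 28), (60, 33), (70, 41), (80, 44)]

def train_test_split(points, test_fraction=0.25, seed=0):
    """Shuffle a copy of the points and split it into (training set, testing set)."""
    shuffled = points[:]                      # copy so the original list is untouched
    random.Random(seed).shuffle(shuffled)     # seeded shuffle for a repeatable split
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

train_set, test_set = train_test_split(data)
print("training points:", len(train_set))
print("testing points:", len(test_set))
```

The algorithm would be fitted on `train_set` only, and `test_set` would be held back to check how well the fitted line predicts points it has not seen.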

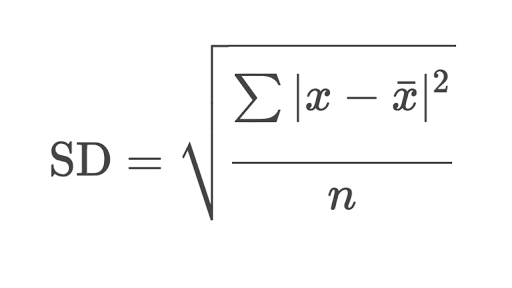

The function shown above is called the cost function. Various functions can serve as a cost function; here I have used the sum of squared errors. It measures the distance between each actual value (a cross on the graph) and the predicted value of Yi, which is the point on the line directly above or below the cross. Note that this distance is measured vertically, along the Y axis, not perpendicular to the line. Fitting the line means choosing the line that makes this cost as small as possible.
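As a rough sketch of the sum of squared errors, the snippet below adds up the squared vertical gaps between actual and predicted values. The exact formula in the picture is not reproduced here; the line `y = 0.5 * x`, the three data points, and the function names are assumptions chosen only for illustration.

```python
def predict(x, slope, intercept):
    """Value of Y that the line slope * x + intercept gives for input x."""
    return slope * x + intercept

def sum_of_squared_errors(points, slope, intercept):
    """Sum over all points of (actual Y - predicted Y) squared."""
    total = 0.0
    for x, y in points:
        residual = y - predict(x, slope, intercept)  # vertical gap to the line
        total += residual ** 2
    return total

# Hypothetical points and a hand-picked line, for illustration only.
points = [(10, 5), (20, 10), (30, 20)]
cost = sum_of_squared_errors(points, slope=0.5, intercept=0.0)
print(cost)  # the first two points lie on the line; the third is off by 5, so the cost is 25.0
```

Squaring keeps positive and negative gaps from cancelling each other out and penalizes large gaps more heavily than small ones.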

Consider the actual point (30, 20) on the graph: the distance is 30 miles and the time taken to travel it is 20 hours. The value predicted by the line, however, is (30, 15).

So the vertical distance of the actual point from the line is 20 - 15 = 5. Minimizing the cost function means choosing the line that makes these distances, squared and summed over all the data points, as small as possible. Please leave a comment if you have any questions.
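For readers who want to see the minimization in action, here is a small sketch. My notes do not say which method is used to minimize the cost, so this example assumes the standard closed-form least squares formulas for a straight line; the data points and function names are hypothetical.

```python
def fit_line(points):
    """Closed-form least squares fit: returns (slope, intercept)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def sse(points, slope, intercept):
    """Sum of squared vertical distances between the points and the line."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in points)

# Hypothetical points; (30, 20) is the actual point from the example above.
points = [(10, 5), (20, 10), (30, 20)]

guess_cost = sse(points, slope=0.5, intercept=0.0)  # hand-picked line that predicts 15 at x = 30
slope, intercept = fit_line(points)
fitted_cost = sse(points, slope, intercept)

print("hand-picked line cost:", guess_cost)
print("fitted line:", slope, intercept)
print("fitted line cost:", fitted_cost)
```

On these points the fitted line has a lower cost than the hand-picked one, which is what minimizing the cost function means in practice.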

Thank you for reading this blog.

Best,

Soham S. Shinde
